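- National Natural Science Foundation of China (NSFC), International (Regional) Cooperation and Exchange Program (62311540022): Key Technologies for Cloud-Edge Collaborative Integrated Brain-Computer Interface Systems, 2023.04-2025.12, Principal Investigator

- NSFC Joint Fund Key Project (U21A20485): Fundamental Theory and Key Technologies of a Cloud-Edge-End Integrated Cloud Brain Platform for Service Robots, 2022.1-2025.12, Principal Investigator

- National Key R&D Program of China (2021YFB2401900): Intelligent Sensing Technology for Energy-Storage Lithium-Ion Batteries, 2022.1-2025.12, Sub-project Leader

- Science and Technology Innovation 2030 "New Generation Artificial Intelligence" Major Project (2021ZD0112700): Theory and Applications of Human-Machine Augmented Large-Scale Multi-Agent Reinforcement Learning, 2022.1-2024.12, Subtask Leader

- NSFC General Program (61976175): Multi-Kernel Correntropy Learning Theory and Methods, 2020.1-2023.12, Principal Investigator

- NSFC Major Research Plan Key Project (91648208): Interaction Methods and Applications of Dexterous and Compliant Lower-Limb Rehabilitation Robots for Human-Robot Coexistence, 2017.1-2020.12, Principal Investigator

- NSFC Joint Fund Key Project (U1613219): Multimodal Fusion Lower-Limb Exoskeleton Robot for Assisting the Elderly and Disabled, 2017.1-2020.12, Sub-project Leader

- National Basic Research Program of China (973 Program) (2015CB351700): Brain Mechanisms of Visual Cognition and Key Technologies for Advanced Brain-Machine Interaction, 2015.1-2019.12, Sub-project Leader

- NSFC General Program (61372152): New Adaptive Filtering Methods in Reproducing Kernel Hilbert Spaces and Their Applications, 2014.1-2017.12, Principal Investigator

- NSFC Young Scientists Fund (60904054): Parameter Identification of Nonlinear Systems Based on the Error Entropy Criterion, 2010.1-2012.12, Principal Investigator

- Shaanxi Provincial Natural Science Basic Research Program Key Project (2019JZ-05): Bias-Compensated Sparse Correntropy Adaptive Filtering and Its Applications, 2020.1-2021.12, Principal Investigator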

Research - 陈 霸东

Our research interests span a wide spectrum of topics, including signal processing, machine learning, brain-machine interfaces, brain disorders and development, brain-inspired computing, battery management systems, intelligent robots, and swarm intelligence.

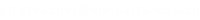

1. Information Theoretic Learning

2. Brain Machine Interfaces

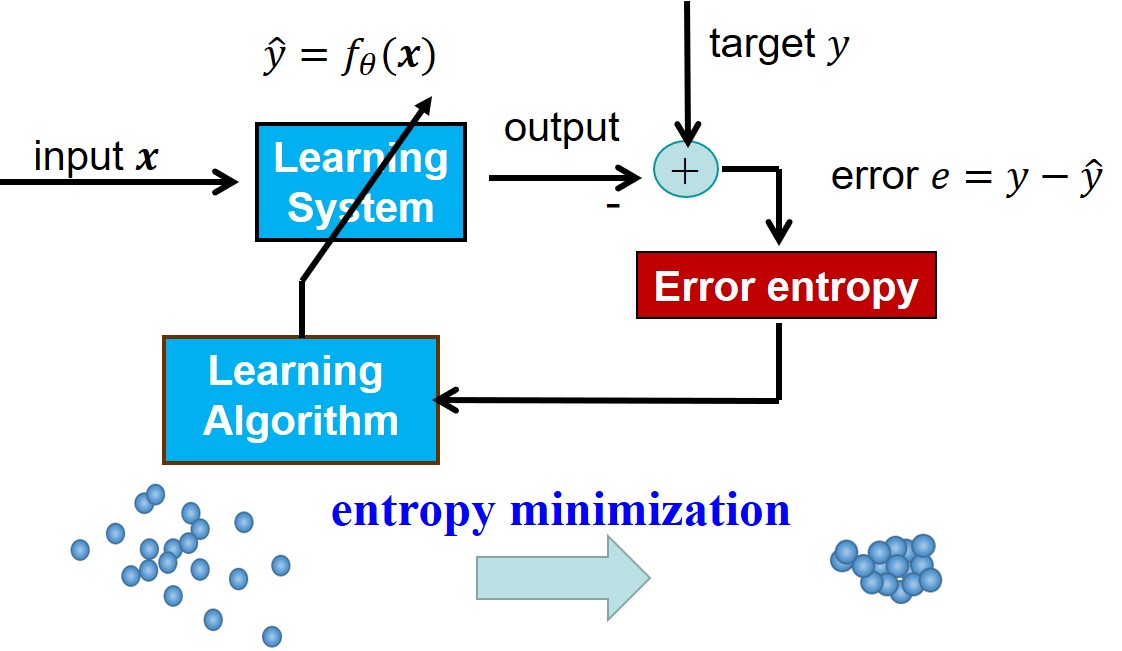

3. Brain Disorder and Brain Development

Psychiatric diagnosis is essential for early intervention and precision treatment of psychiatric disorders. However, current clinical diagnosis relies solely on symptoms and phenomenology, which may lead to misdiagnosis and delay early treatment. Our research therefore focuses on developing diagnostic tools that use psychosocial, biological, and cognitive signatures beyond clinical symptoms to obtain biologically meaningful biomarkers for diagnosis (e.g., of Alzheimer's disease, autism, and depression). Specifically, we analyze magnetic resonance images (MRI) to obtain brain network features through brain network analysis (e.g., functional connectivity). To ensure that these features are the most informative about the diagnostic label, we use information theory (e.g., the information bottleneck) to learn compact representations. Finally, these representations are fed to neural networks that make the decision (i.e., psychiatric patient or healthy control). By combining structural MRI (sMRI), functional MRI (fMRI), diffusion MRI (DTI), and other modalities, we also explore the developmental patterns of the early brain, which could advance our understanding of early postnatal brain development and development-related diseases, especially for critical structures that have not been well studied, such as the subcortical structures and the cerebellum. Emotion and behavior data will also be included to investigate the relationships between brain structures and emotional or behavioral functions.
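As a rough illustration of the "brain network features" step above, functional connectivity is commonly computed as the Pearson correlation between the time series of pairs of brain regions. The sketch below is a minimal, assumption-laden example and not the group's actual pipeline: the function names, the toy region-of-interest (ROI) signals, and the choice of plain Pearson correlation are all illustrative.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length, non-constant time series."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def functional_connectivity(roi_series):
    """Return the symmetric ROI-by-ROI correlation matrix (list of lists)."""
    k = len(roi_series)
    fc = [[1.0] * k for _ in range(k)]
    for i in range(k):
        for j in range(i + 1, k):
            fc[i][j] = fc[j][i] = pearson(roi_series[i], roi_series[j])
    return fc


def upper_triangle(fc):
    """Flatten the strictly upper triangle into a feature vector."""
    k = len(fc)
    return [fc[i][j] for i in range(k) for j in range(i + 1, k)]


# Toy data (hypothetical): three ROIs, six time points each.
toy_rois = [
    [1.0, 2.0, 3.0, 4.0, 5.0, 6.0],
    [2.0, 4.0, 6.0, 8.0, 10.0, 12.0],  # scaled copy of ROI 0
    [6.0, 5.0, 4.0, 3.0, 2.0, 1.0],    # time-reversed copy of ROI 0
]
features = upper_triangle(functional_connectivity(toy_rois))
```

In a real study the feature vector would then go to a representation learner and classifier. With NumPy available, `numpy.corrcoef` applied to an ROI-by-time array computes the same matrix in one call.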

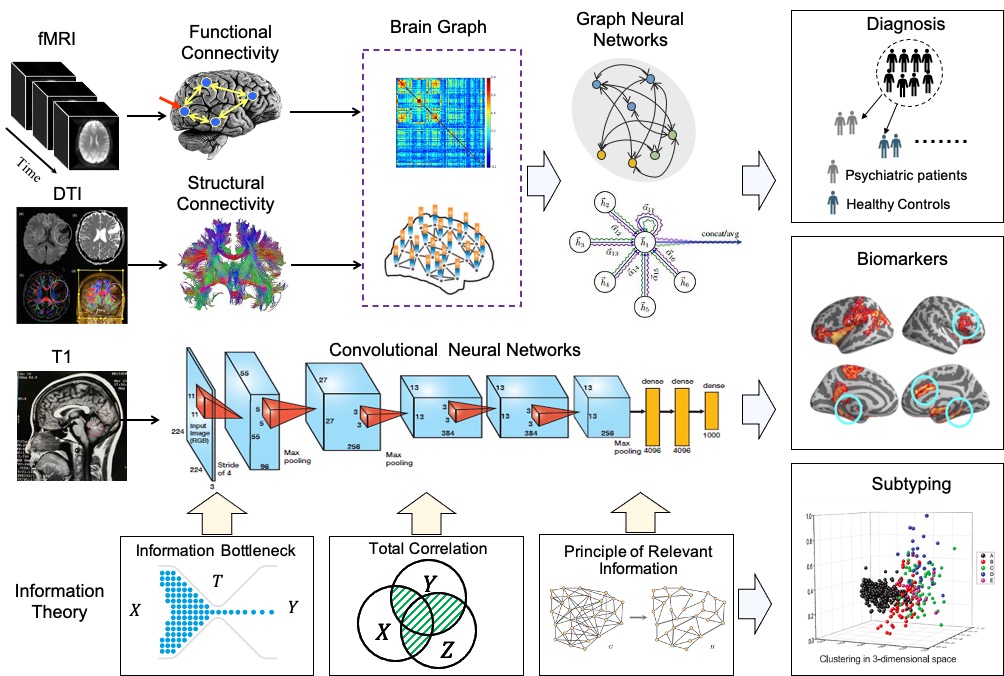

4. Brain Inspired Computing

Brain-inspired computing is a cornerstone for advancing artificial general intelligence, spanning computational theories, system architectures, application models, and algorithms. Our research focuses on developing diverse training methodologies for brain-inspired neural networks and spiking neural networks, achieving performance on par with or exceeding that of artificial neural networks in complex recognition tasks at lower power consumption. In addition, we are working on the design of neuromorphic hardware, with the ultimate goal of creating brain-inspired chips and low-power AI chips. This work aims to enable low-power robots and hardware devices equipped with neuromorphic computing capabilities.
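For readers unfamiliar with spiking neural networks, the sketch below simulates a single leaky integrate-and-fire (LIF) neuron, a common textbook building block of such networks. It is only an illustration: the text above does not state which neuron model or training method the group uses, and the parameter values (`tau`, `v_th`, `v_reset`) are arbitrary assumptions.

```python
def simulate_lif(input_current, tau=10.0, v_th=1.0, v_reset=0.0, dt=1.0):
    """Simulate a discrete-time leaky integrate-and-fire neuron.

    input_current: sequence of input values, one per time step.
    Returns a list of 0/1 spike indicators of the same length.
    """
    v = v_reset
    spikes = []
    for i_t in input_current:
        # Forward-Euler step of dv/dt = (-v + i_t) / tau
        v += dt * (-v + i_t) / tau
        if v >= v_th:
            spikes.append(1)  # emit a spike and reset the membrane potential
            v = v_reset
        else:
            spikes.append(0)
    return spikes


# A weak constant input settles below threshold and never fires;
# a strong constant input fires periodically.
weak = simulate_lif([0.5] * 100)
strong = simulate_lif([2.0] * 100)
```

Because the neuron communicates only through sparse binary events, downstream computation happens only when a spike occurs, which is the usual argument for the low power consumption of spiking hardware.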

5. Battery Management Systems

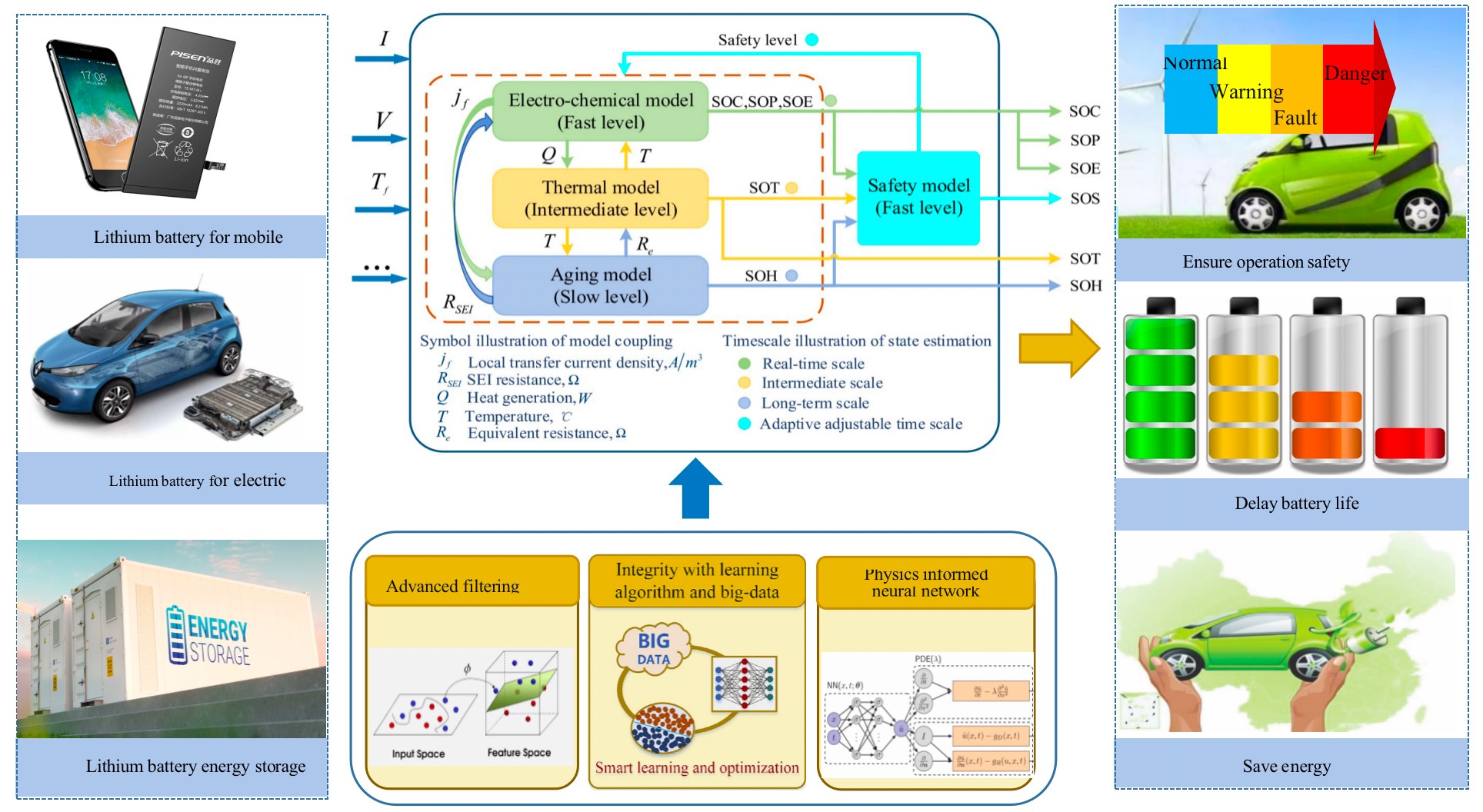

Batteries are now pervasive in portable electronics, electrified vehicles, and renewable energy storage. Battery management is of paramount importance for the operational efficiency, safety, reliability, and cost effectiveness of battery-powered energy systems. Owing to complicated electrochemical dynamics and multi-physics coupling, a simple black-box emulation of batteries that senses only voltage, current, and surface temperature cannot deliver high-performance battery management. Accurate and robust estimation and monitoring of critical internal states is therefore a key enabling technology for advanced battery management. Credible knowledge of State of Charge (SOC), State of Energy (SOE), State of Health (SOH), State of Power (SOP), State of Temperature (SOT), and State of Safety (SOS) is a prerequisite for effective charging, thermal, and health management of batteries. Our research seeks to advance battery management systems by developing state-of-the-art methods in model-based filtering, machine learning, and physics-informed neural networks. These approaches will contribute to safer, more efficient, and longer-lasting battery systems, benefiting the wide range of applications and industries that rely on energy storage technology.
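To make the notion of State of Charge concrete, the sketch below implements Coulomb counting, the simplest open-loop SOC estimate: integrate the measured current and divide by the cell capacity. This is a baseline for illustration only, not the model-based filtering or learning methods mentioned above. The function name, the sign convention (discharge current positive), and the 2 Ah toy cell are assumptions.

```python
def coulomb_count_soc(soc0, currents_a, dt_s, capacity_ah):
    """Track SOC by integrating current over time.

    soc0:        initial SOC as a fraction in [0, 1]
    currents_a:  current samples in amperes (discharge positive, assumed)
    dt_s:        sampling interval in seconds
    capacity_ah: nominal cell capacity in ampere-hours
    Returns the SOC after each sample, clamped to [0, 1].
    """
    capacity_as = capacity_ah * 3600.0  # convert Ah to ampere-seconds
    soc = soc0
    trajectory = []
    for i_a in currents_a:
        soc -= i_a * dt_s / capacity_as
        soc = min(1.0, max(0.0, soc))
        trajectory.append(soc)
    return trajectory


# Toy case: a full 2 Ah cell discharged at 1 A for one hour, sampled every
# second, should end at roughly half charge.
trajectory = coulomb_count_soc(1.0, [1.0] * 3600, 1.0, 2.0)
```

Coulomb counting drifts with current-sensor bias and depends on knowing the initial SOC and the true capacity, which is one reason closed-loop estimators that also use a voltage model are preferred in practice.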

6. Intelligent Service Robot

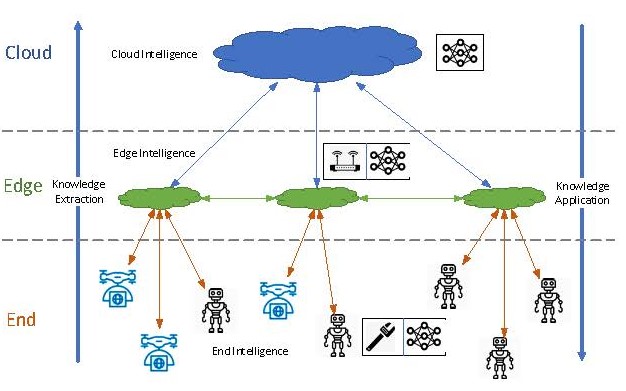

We focus on a "cloud-edge-end" integrated robot intelligence model. By taking advantage of the rich computing resources of cloud computing and the low-latency response of edge computing, this model allows us to extract knowledge from the "end" to the "cloud" and apply knowledge from the "cloud" to the "end". It empowers robots in terms of computing power, responsiveness, privacy protection, and knowledge sharing. In addition, large language models (LLMs) are the underlying technology driving the rapid rise of generative AI chatbots: tools such as ChatGPT, Google Bard, and Bing Chat rely on LLMs to generate human-like responses to prompts and questions. Through the interaction of an LLM and a visual language model (VLM), goals and obstacles to be avoided are analyzed in 3D space to help the robot plan its actions. Our research also focuses on how to get the LLM to automatically generate a suitable motion planner, a map of corresponding action instructions, and the related code from simple natural language instructions, with the environmental information obtained from the VLM. The advantage of this approach is that there is no need to pre-train the model. We use a large model to instruct the robot how to interact with the environment, which addresses the scarcity of robot training data and better realizes the zero-shot capability of robot intelligence.

7. Multi-Agent Swarm Intelligence

(Innovation Harbour)
