Partial differential equations (PDEs) are indispensable in many disciplines, including science, engineering, biology, and finance. As computer technology has advanced, numerical methods such as finite differences, finite elements, finite volumes, and spectral methods have evolved rapidly and proved highly effective in applications ranging from weather forecasting to aircraft design and reservoir exploration. Despite these advances, traditional numerical methods suffer from the "curse of dimensionality" when applied to high-dimensional problems. For example, solving a 20-dimensional Poisson equation with the linear finite element method would require approximately $10^{40}$ degrees of freedom to keep the error on the order of $10^{-2}$, which illustrates the limitations of traditional approaches in tackling high-dimensional problems. Even in two and three dimensions, these methods face significant challenges: meshing complex domains is difficult, and time-iteration schemes can lead to instability and error accumulation, reducing computational efficiency. The substantial computational cost of high-precision, high-resolution simulations makes these traditional methods less suitable for contemporary needs such as digital twins, data assimilation, big data integration, and intelligent design.
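The degrees-of-freedom count for the 20-dimensional example can be reproduced with a rough estimate. The sketch below assumes a quasi-uniform mesh of size $h$ on the unit cube, the first-order energy-norm error bound for linear elements, and a constant $C$ of order one; none of these assumptions is stated in the text, so the calculation is only indicative.

$$
\|u - u_h\|_{H^1} \le C h \approx 10^{-2}
\;\Longrightarrow\; h \approx 10^{-2},
\qquad
N \approx h^{-d} = \left(10^{2}\right)^{20} = 10^{40}.
$$

Under the same assumptions, halving the error doubles the number of points per direction and multiplies $N$ by $2^{20} \approx 10^{6}$.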

In recent years, a variety of methods based on artificial neural networks have emerged to solve PDEs, showing promise in overcoming some of these challenges. These approaches merit thorough study. However, despite their potential, neural network methods trained by optimization often suffer from insufficient accuracy, slow training, and uncontrollable errors due to the lack of efficient optimization algorithms.

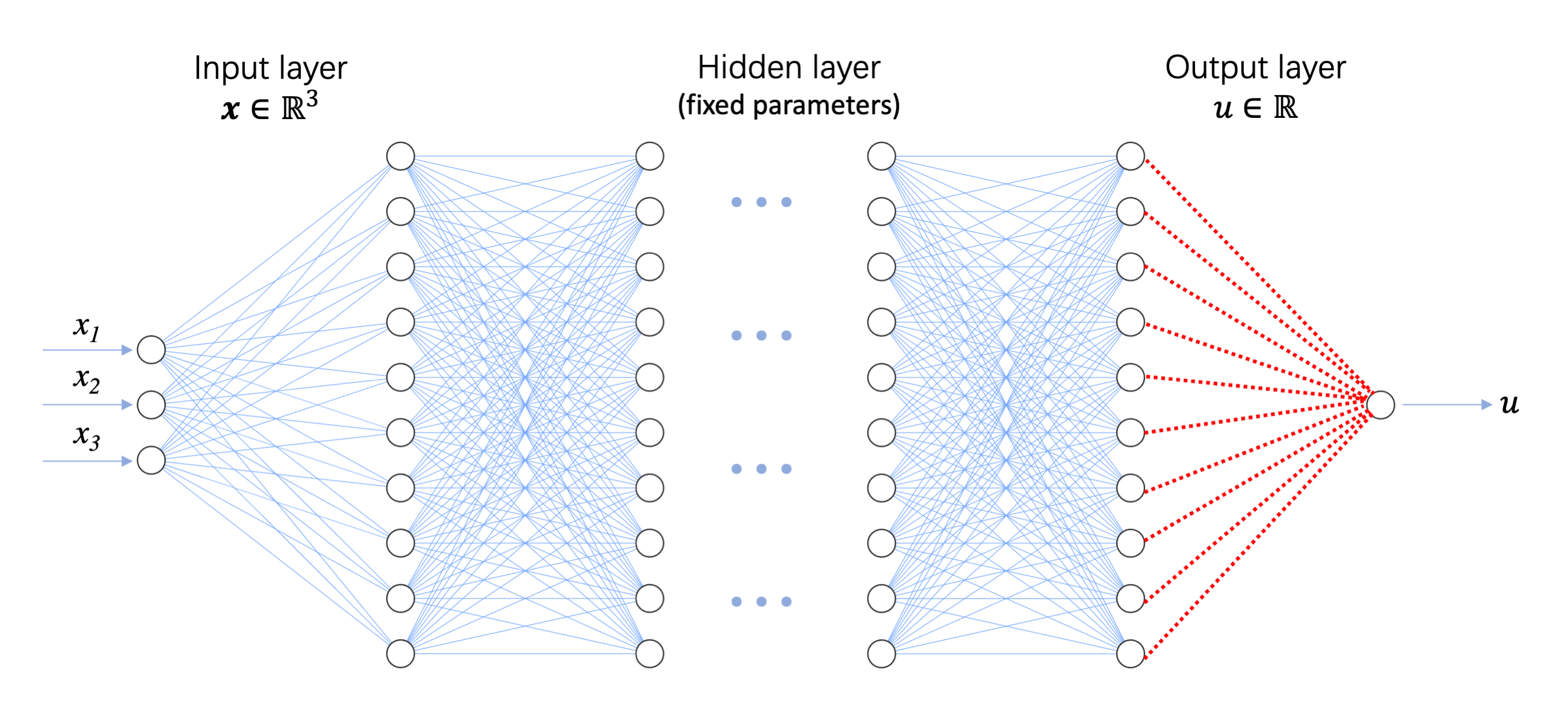

To leverage the strengths of both traditional and neural network approaches while minimizing their limitations, we explore the use of randomized neural network methods for solving PDEs. This strategy uses the powerful approximation capabilities of neural networks to circumvent the limitations of classical numerical methods, with the aim of improving both accuracy and training efficiency. Randomized Neural Networks (RNNs) are neural networks in which the hidden-layer parameters are fixed to randomly assigned values, and the output-layer parameters are obtained by solving a linear system through least squares. Because training reduces to a linear least-squares solve rather than a nonconvex optimization, efficiency improves without degrading the accuracy of the neural network.
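The idea of fixing the hidden layer and solving only for the output layer can be illustrated with a minimal NumPy sketch. It fits a known function rather than a PDE solution. The `tanh` activation, the uniform weight range, the layer width, and all function names are illustrative assumptions, not choices taken from the cited papers.

```python
import numpy as np

def make_random_features(n_hidden, in_dim, scale, rng):
    """Draw hidden-layer weights and biases once; they are never trained."""
    W = rng.uniform(-scale, scale, size=(in_dim, n_hidden))
    b = rng.uniform(-scale, scale, size=n_hidden)
    return W, b

def hidden_layer(x, W, b):
    """Evaluate the fixed hidden layer; returns shape (n_points, n_hidden)."""
    return np.tanh(x @ W + b)

rng = np.random.default_rng(0)
W, b = make_random_features(n_hidden=100, in_dim=1, scale=5.0, rng=rng)

# Target function to approximate on [0, 1] (illustrative choice).
target = lambda x: np.sin(2.0 * np.pi * x)

# The output-layer coefficients are the only unknowns, and the network
# output is linear in them, so they follow from one least-squares solve.
x_train = np.linspace(0.0, 1.0, 400).reshape(-1, 1)
Phi = hidden_layer(x_train, W, b)
coef, *_ = np.linalg.lstsq(Phi, target(x_train), rcond=None)

# Check the fit at points that were not used in the solve.
x_test = rng.uniform(0.0, 1.0, size=(1000, 1))
err = np.max(np.abs(hidden_layer(x_test, W, b) @ coef - target(x_test)))
print(f"max test error: {err:.2e}")
```

No gradient-based training loop appears anywhere: the only computational step is the call to `np.linalg.lstsq`.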

Randomized Neural Network: the solid blue line represents the parameters of the neural network that are randomly initialized and fixed thereafter, while the dotted red line refers to the adjustable parameters.

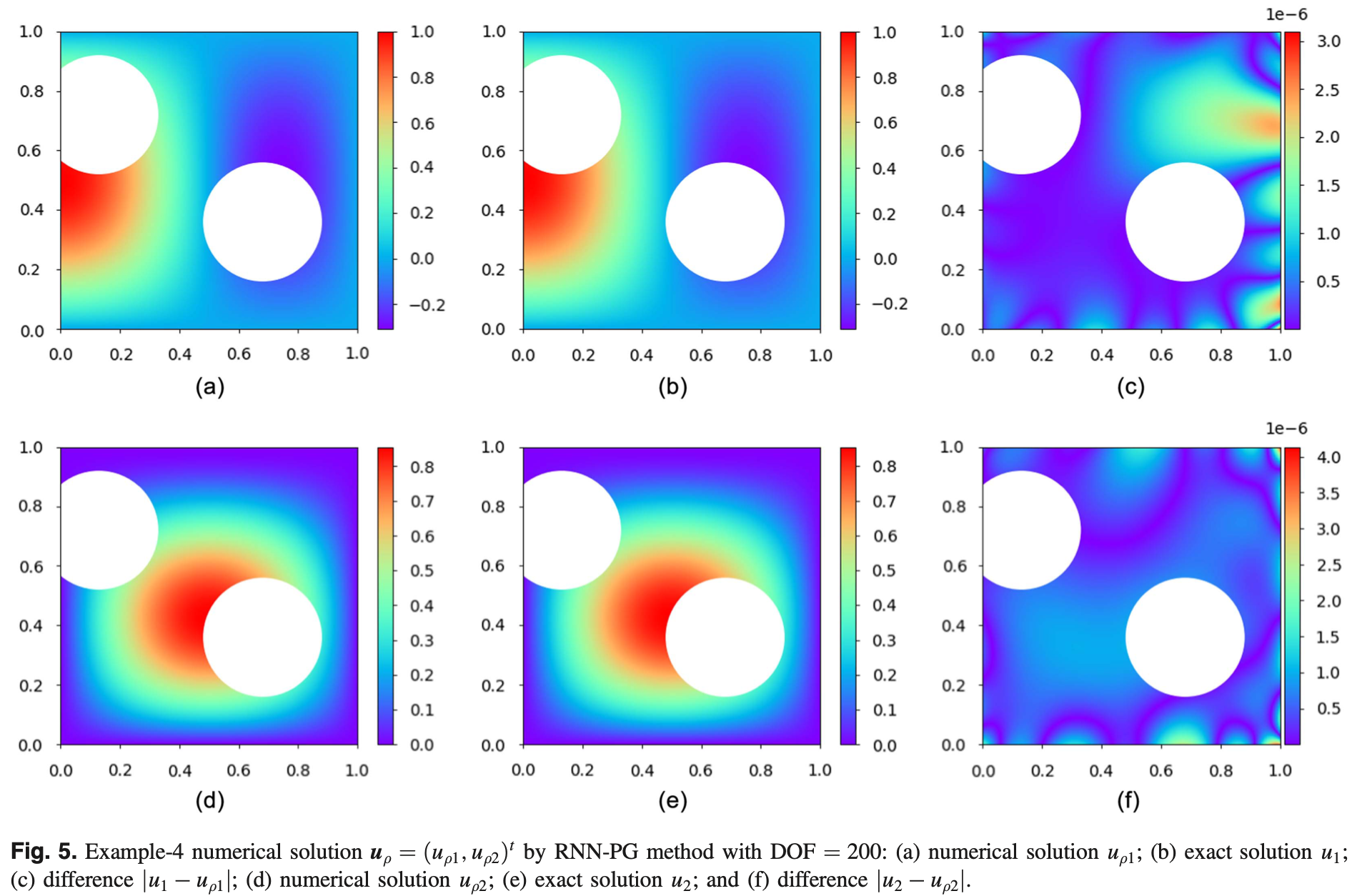

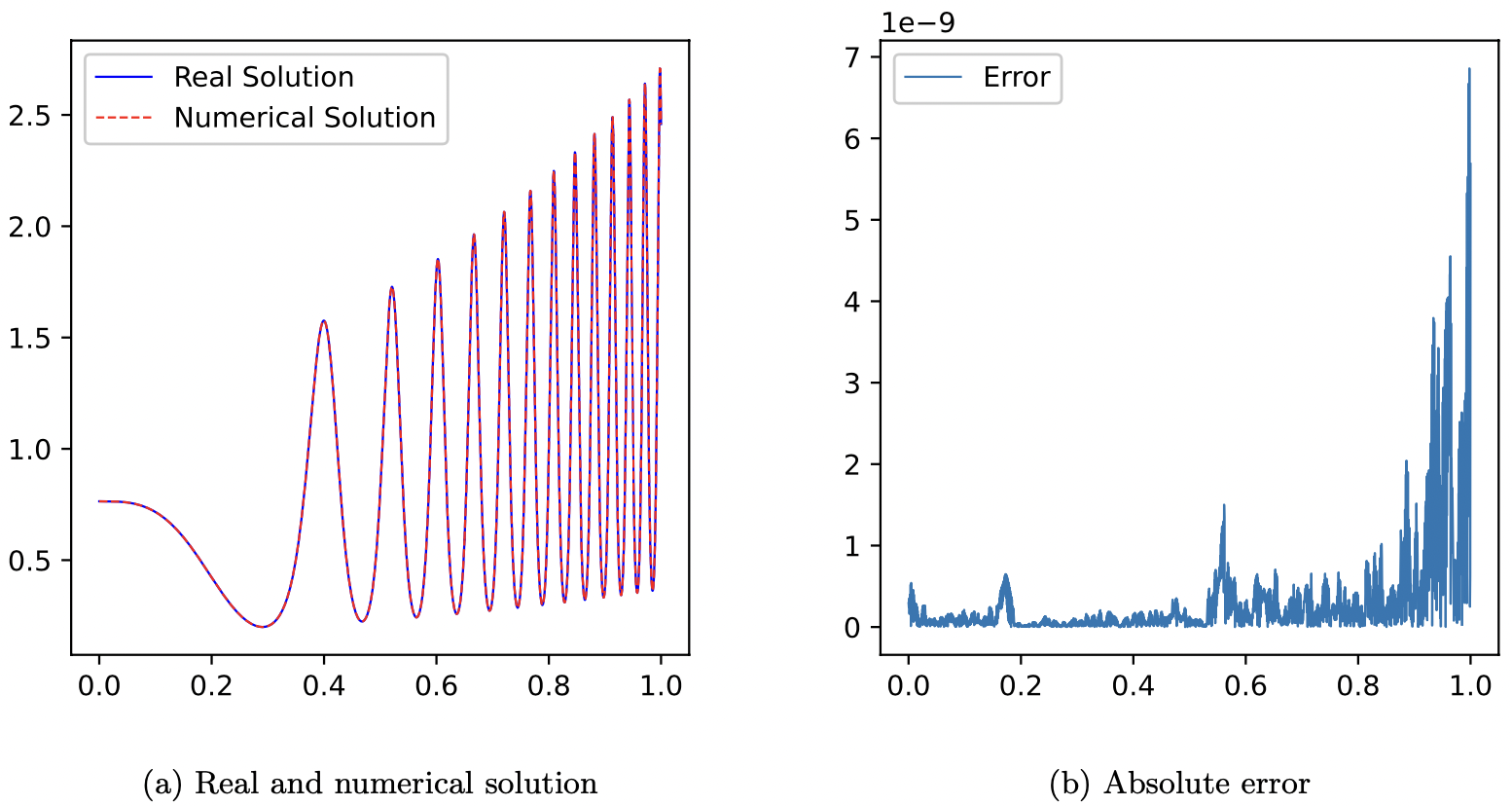

In [16], we proposed a new approach for solving partial differential equations (PDEs) based on randomized neural networks and the Petrov-Galerkin method, which we call the RNN-PG method. It uses randomized neural networks to approximate the unknown functions and allows a flexible choice of test functions, such as finite element basis functions, Legendre or Chebyshev polynomials, or neural networks. We applied the RNN-PG method to various problems, including Poisson problems in primal or mixed formulations and time-dependent problems treated with a space-time approach. This paper is adapted from the work originally posted on arXiv by the same authors (arXiv:2201.12995, Jan 31, 2022). The new ingredients include nonlinear PDEs such as Burgers' equation and a numerical example of a high-dimensional heat equation. Numerical experiments show that the RNN-PG method can achieve high accuracy with a small number of degrees of freedom. Moreover, RNN-PG has several advantages: it is mesh-free, handles different boundary conditions easily, solves time-dependent problems efficiently, and solves high-dimensional problems quickly. These results demonstrate the great potential of the RNN-PG method in the field of numerical methods for PDEs. The RNN-PG method was further developed in [17] to solve linear elasticity and Navier-Stokes equations.
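A minimal sketch of the Petrov-Galerkin idea for the 1D Poisson problem $-u'' = f$ on $(0,1)$ with $u(0)=u(1)=0$ is given below. The trial space is a random `tanh` feature network and the test functions are finite element hat functions. The weight range, the sizes, the 4-point Gauss quadrature, and the enforcement of boundary values through two extra rows of the least-squares system are assumptions made for illustration. They are not necessarily the choices made in [16].

```python
import numpy as np

rng = np.random.default_rng(0)
n_hidden, n_elem = 50, 64                 # trial features, test-function cells
w = rng.uniform(-5.0, 5.0, n_hidden)      # fixed random hidden weights
b = rng.uniform(-5.0, 5.0, n_hidden)      # fixed random hidden biases

def phi(x):
    """Trial features tanh(w*x + b); shape (len(x), n_hidden)."""
    return np.tanh(np.outer(x, w) + b)

def dphi(x):
    """Derivatives of the trial features with respect to x."""
    return w * (1.0 - np.tanh(np.outer(x, w) + b) ** 2)

# Manufactured problem: -u'' = f with exact solution sin(pi x).
u_exact = lambda x: np.sin(np.pi * x)
f = lambda x: np.pi**2 * np.sin(np.pi * x)

nodes = np.linspace(0.0, 1.0, n_elem + 1)
h = nodes[1] - nodes[0]
gq, gw = np.polynomial.legendre.leggauss(4)   # Gauss rule on [-1, 1]

# One row per interior hat function, plus two rows for the boundary values.
A = np.zeros((n_elem + 1, n_hidden))
rhs = np.zeros(n_elem + 1)
for i in range(1, n_elem):
    # The hat function at nodes[i] rises on the left cell, falls on the right.
    for left, slope in ((nodes[i - 1], 1.0 / h), (nodes[i], -1.0 / h)):
        xq = left + 0.5 * h * (gq + 1.0)      # quadrature points in the cell
        wq = 0.5 * h * gw                     # scaled quadrature weights
        hat = (xq - nodes[i - 1]) / h if slope > 0 else (nodes[i + 1] - xq) / h
        A[i - 1] += slope * (wq @ dphi(xq))   # integral of u' * hat'
        rhs[i - 1] += wq @ (f(xq) * hat)      # integral of f * hat
A[-2] = phi(np.array([0.0]))[0]               # row enforcing u(0) = 0
A[-1] = phi(np.array([1.0]))[0]               # row enforcing u(1) = 0

# Output-layer coefficients from a single linear least-squares solve.
coef, *_ = np.linalg.lstsq(A, rhs, rcond=None)

x_fine = np.linspace(0.0, 1.0, 501)
err = np.max(np.abs(phi(x_fine) @ coef - u_exact(x_fine)))
print(f"max error: {err:.2e}")
```

The test functions are needed only to form the integrals, so swapping the hat functions for Legendre polynomials or another family changes the rows of `A` but leaves the trial network and the least-squares solve unchanged.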

In [18], we combine the ideas of the Local RNN (LRNN) and the Discontinuous Galerkin (DG) approach for solving partial differential equations. RNNs are used to approximate the solution on the subdomains, and the DG formulation is used to glue them together. Taking the Poisson problem as a model, we propose three numerical schemes and provide a convergence analysis. We then extend the ideas to time-dependent problems: taking the heat equation as a model, three space-time LRNN with DG formulations are proposed. Finally, we present numerical tests that compare the proposed methods with the finite element method and the conventional DG method. The LRNN-DG methods can achieve higher accuracy with the same number of degrees of freedom, and can solve time-dependent problems more precisely and efficiently. This indicates that the new approach has great potential for solving partial differential equations. The LRNN-DG methods were extended in [19] to solve the diffusive-viscous wave equation.

In [20], we explored the use of randomized neural networks (RNNs) for solving obstacle problems, which are elliptic variational inequalities of the first kind that arise in various fields. Obstacle problems can be regarded as free boundary problems, and they are difficult to solve numerically because of their nonsmooth and nonlinear nature. Our first method, RNN with Petrov-Galerkin (RNN-PG), applies RNN to the Petrov-Galerkin variational formulation and solves the resulting linearized subproblems by Picard or Newton iterations. Our second method, RNN with PDE (RNN-PDE), directly solves the partial differential equations (PDEs) associated with the obstacle problems using RNN. We conduct numerical experiments to demonstrate the effectiveness and efficiency of our methods, and show that RNN-PDE outperforms RNN-PG in terms of speed and accuracy.
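For orientation, a standard model obstacle problem can be written as follows. The Laplacian setting, the obstacle $\psi$, the load $f$, and homogeneous Dirichlet boundary conditions are assumed here for illustration; the problems treated in [20] may be more general. With the admissible set $K = \{ v \in H_0^1(\Omega) : v \ge \psi \text{ a.e. in } \Omega \}$, the variational inequality of the first kind reads

$$
\text{find } u \in K: \quad \int_\Omega \nabla u \cdot \nabla (v - u)\, dx \;\ge\; \int_\Omega f\,(v - u)\, dx \quad \forall\, v \in K,
$$

and, for sufficiently regular solutions, it is equivalent to the complementarity form

$$
-\Delta u - f \ge 0, \qquad u - \psi \ge 0, \qquad (-\Delta u - f)(u - \psi) = 0 \quad \text{in } \Omega .
$$

The first form is the natural starting point for a Petrov-Galerkin type discretization, while the second is a pointwise PDE statement of the same problem.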

[16] Yong Shang, Fei Wang* and Jingbo Sun, Randomized Neural Network with Petrov-Galerkin Methods for Solving Linear and Nonlinear Partial Differential Equations, Communications in Nonlinear Science and Numerical Simulation 127 (2023), 107518. (Jan 31, 2022, arXiv:2201.12995)

[17] Yong Shang and Fei Wang*, Randomized Neural Networks with Petrov-Galerkin Methods for Solving Linear Elasticity and Navier-Stokes Equations, Journal of Engineering Mechanics 150 (2024), 04024010. (arXiv:2308.03088)

[18] Jingbo Sun, Suchuan Dong and Fei Wang*, Local Randomized Neural Networks with Discontinuous Galerkin Methods for Partial Differential Equations, Journal of Computational and Applied Mathematics 445 (2024), 115830. (arXiv:2206.05577)

[19] Jingbo Sun and Fei Wang*, Local Randomized Neural Networks with Discontinuous Galerkin Methods for Diffusive-Viscous Wave Equation, Computers and Mathematics with Applications 154 (2024), 128-137. (25 May 2023, arXiv:2305.16060)

[20] Fei Wang and Haoning Dang, Randomized Neural Network Methods for Solving Obstacle Problems, Volume on Nonsmooth Problems with Applications in Mechanics, Banach Center Publications, accepted.